Kubernetes

Posit Connect can be configured to run on a Kubernetes cluster within AWS. This architecture is designed for the reliability and scale that come with a distributed, highly available deployment using modern container orchestration. It is best suited for organizations that already use Kubernetes for production workloads or have specific needs that our high availability (HA) architecture does not meet.

Architectural overview

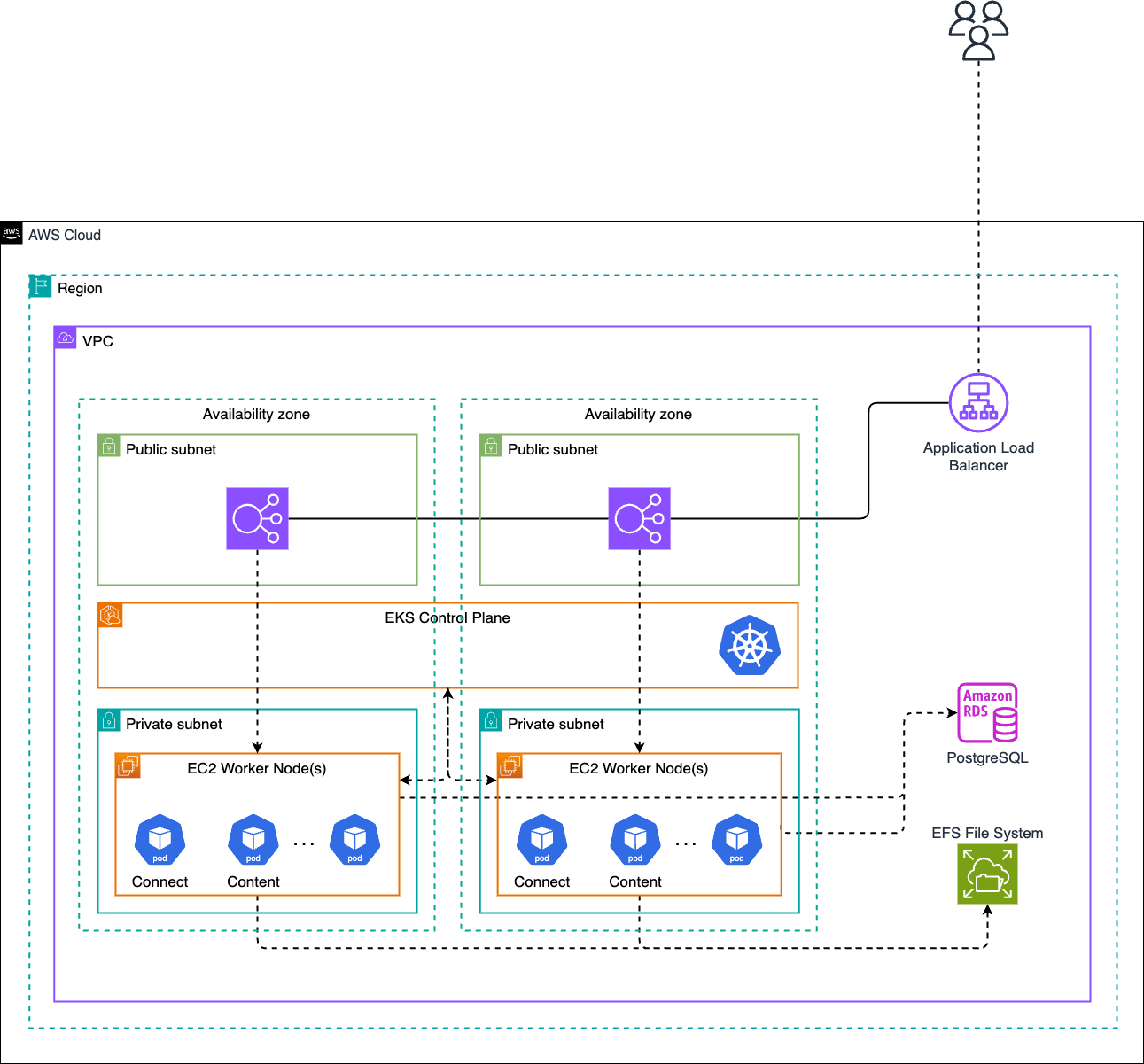

This deployment of Posit Connect uses Off-Host Execution and the following AWS resources:

- AWS Application Load Balancer (ALB) to route requests to the Connect service.

- AWS Elastic Kubernetes Service (EKS) to provision and manage the Kubernetes cluster.

- AWS Relational Database Service (RDS) for PostgreSQL, serving as the application database for Connect.

- AWS Elastic File System (EFS), a networked file system used to store file data, which is mounted across the Connect services.

Architecture diagram

Kubernetes cluster

The Kubernetes cluster can be provisioned using AWS Elastic Kubernetes Service (EKS).

Nodes

We recommend at least two worker nodes of instance type m6i.2xlarge. Both the number of nodes and the instance type can be increased for more demanding workloads. Your instance needs will depend on the size of your audience for Connect content as well as the compute and memory needs of your data scientists' applications.

- Worker nodes should be provisioned across more than one availability zone and within private subnets.

- This reference architecture does not assume autoscaling node groups. It assumes you have a fixed number of nodes within your node group.
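The node guidance above can be expressed as a small check. This is a minimal sketch, not part of the reference architecture: the node names and zone labels are hypothetical, and in practice you would read the zone of each node from your cluster rather than hard-coding it.

```python
from collections import Counter

MIN_NODES = 2  # "at least two worker nodes", per the recommendation above


def check_node_group(node_zones: dict[str, str]) -> list[str]:
    """Return a list of problems; an empty list means the layout matches the guidance."""
    problems = []
    if len(node_zones) < MIN_NODES:
        problems.append(
            f"expected at least {MIN_NODES} worker nodes, found {len(node_zones)}"
        )
    # Count how many nodes sit in each availability zone.
    zones = Counter(node_zones.values())
    if len(zones) < 2:
        problems.append(
            "worker nodes are not spread across more than one availability zone"
        )
    return problems


# Hypothetical node names and zones, for illustration only.
nodes = {"worker-a": "us-east-1a", "worker-b": "us-east-1b"}
print(check_node_group(nodes))
```

An empty list indicates the fixed node group satisfies both bullets; each string in a non-empty list names one unmet recommendation.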

Database

This configuration uses an RDS for PostgreSQL instance of type db.m5.large, provisioned with a minimum of 15 GB of storage and running the latest minor version of PostgreSQL 16 (see supported versions). Both the instance type and the storage can be scaled up for more demanding workloads.

- The RDS instance should be configured with an empty PostgreSQL database for the Connect metadata.

- The RDS instance should be a Multi-AZ deployment and should use a DB subnet group across all private subnets containing worker nodes for the EKS cluster.

Load balancer

This architecture uses an AWS Application Load Balancer (ALB) to provide public ingress and load balancing to the Connect service within EKS.

- The ALB must be configured with sticky sessions enabled.
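As an illustration of the sticky-session requirement, the sketch below builds the ALB target group attributes that enable load-balancer cookie stickiness. It assumes, without confirmation from this document, that the ALB is managed through the AWS Load Balancer Controller, which accepts these attributes as a comma-separated value of the `alb.ingress.kubernetes.io/target-group-attributes` Ingress annotation. The one-day cookie duration is an illustrative choice, not a Posit recommendation, and the helper names are hypothetical.

```python
MAX_COOKIE_SECONDS = 604800  # ALB load-balancer cookies last at most seven days


def stickiness_attributes(duration_seconds: int = 86400) -> dict[str, str]:
    """Build target group attributes that turn on ALB cookie-based stickiness."""
    if not 1 <= duration_seconds <= MAX_COOKIE_SECONDS:
        raise ValueError("duration_seconds must be between 1 and 604800")
    return {
        "stickiness.enabled": "true",
        "stickiness.type": "lb_cookie",
        "stickiness.lb_cookie.duration_seconds": str(duration_seconds),
    }


def as_annotation_value(attributes: dict[str, str]) -> str:
    """Join attributes into the key=value,key=value form used by the annotation."""
    return ",".join(f"{key}={value}" for key, value in attributes.items())


# Assumed usage: the printed string would be the value of the
# alb.ingress.kubernetes.io/target-group-attributes annotation.
print(as_annotation_value(stickiness_attributes()))
```

If your ALB is provisioned another way (for example, directly through infrastructure-as-code), the same three attribute keys apply to the target group itself.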

Networking

The architecture is implemented in a virtual private cloud, using both public and private subnets across multiple availability zones. This setup ensures high availability and fault tolerance for all deployed resources. The RDS database instance, EFS mount targets, and EC2 instances are located within the private subnets, and ingress to the EC2 instances is managed through an ALB.

Configuration details

The required configuration details are outlined in the off-host execution installation & configuration steps.

Resiliency and availability

This implementation of Connect is resilient to AZ failures, but not to full region failures. Assuming worker nodes are in separate availability zones, with Connect pods running on each worker node, the failure of any one node will disrupt user sessions on the failed node, but will not result in overall service downtime.
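The reasoning above can be checked with a small simulation: remove one availability zone at a time and confirm that at least one Connect pod remains. This is a sketch under stated assumptions; the pod names and zone labels are hypothetical, and real placement would come from your cluster.

```python
def surviving_pods(pod_zones: dict[str, str], failed_zone: str) -> list[str]:
    """Return the pods that are still running after one zone fails."""
    return sorted(pod for pod, zone in pod_zones.items() if zone != failed_zone)


def survives_any_single_az_failure(pod_zones: dict[str, str]) -> bool:
    """True if at least one pod remains no matter which single zone fails."""
    zones = set(pod_zones.values())
    # With no pods at all there is nothing left to serve requests.
    return bool(zones) and all(surviving_pods(pod_zones, zone) for zone in zones)


# Hypothetical Connect pod placement, for illustration only.
pods = {"connect-0": "us-east-1a", "connect-1": "us-east-1b"}
print(surviving_pods(pods, "us-east-1a"))
print(survives_any_single_az_failure(pods))
```

Sessions pinned to pods in the failed zone are lost, which matches the disruption described above, while the remaining pods keep the service available.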

We recommend aligning with your organizational standards for backup and disaster recovery procedures for the RDS instances and EFS file systems supporting this deployment. These two components, along with your Helm values.yaml file, are needed to restore Connect in the event of a cluster or regional failure.

Performance

The Connect team conducts performance testing on this architecture using the Grafana k6 tool. The workload consists of one virtual user (VU) publishing an R-based Plumber application repeatedly, while other VUs are making API fetch requests to a Python-based Flask application.

The first test is a scalability test, where the number of VUs fetching the Flask app is increased steadily until the throughput is maximized. After noting the number of VUs needed to saturate the server, a second “load” test is run with that same number of VUs for 30 minutes, to accurately measure request latency when the server is fully utilized.

Below are the results for the load test:

- Average requests per second: 1929 rps

- Average request latency (fetch): 175 ms

- Number of VUs: 400

- Error rate: 0%

Note that k6 VUs are not equivalent to real-world users, because they were run without sleeps to maximize throughput. To approximate the number of real-world users, you could multiply the RPS by 10.
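Applied to the load test results above, the rule of thumb works out as follows. This is a rough approximation only; the function name is hypothetical, and the factor of 10 comes from the note above rather than from any measurement of real user behavior.

```python
RPS_TO_USERS_FACTOR = 10  # rule of thumb from the note above


def estimate_real_world_users(average_rps: float) -> int:
    """Approximate concurrent real-world users from a measured request rate."""
    return round(average_rps * RPS_TO_USERS_FACTOR)


# 1929 rps is the average throughput reported for the load test.
print(estimate_real_world_users(1929))  # 19290
```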

Applications that perform complex processing tasks will likely require nodes with more CPU and RAM to achieve the same throughput and latency as the results above. We suggest running performance tests on your own applications to accurately determine hardware requirements.

FAQ

See the Architecture FAQs page for the general FAQ.