Workbench-managed Databricks Credentials

Posit Workbench has a native integration for Databricks that includes support for managed Databricks OAuth credentials and a dedicated pane in the RStudio Pro IDE.

Workbench-managed credentials allow you to sign into a Databricks workspace from the home page and be immediately granted access to data and compute resources using your existing Databricks identity. Managed credentials avoid the burden (and risk) of managing Databricks personal access tokens (PATs) yourself, and work with the Databricks CLI, most official packages or SDKs, and database drivers for Python and R.

Anything that implements the Databricks client unified authentication standard will pick up the ambient credentials supplied by Workbench.

Workbench-managed Databricks credentials are refreshed automatically while your session is active.

Starting a Session with Workbench-managed Databricks credentials

If your administrator has configured and enabled the Databricks integration, a new drop-down displays in the New Session dialog. This allows you to select which Databricks workspace to connect to.

Select an option and then begin the authentication flow by clicking Sign In if not already authenticated. Following the success of this process, the Sign In button updates to show that you are now signed in.

You can only start a session if you have signed in with that Databricks workspace. Selecting None will always allow you to begin a new session without any managed credentials.

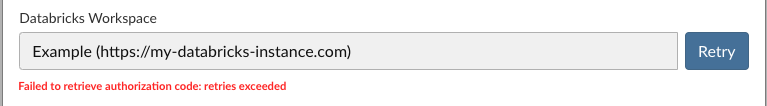

If an error occurs in the authentication flow or too much time elapses between initiating the process and completing it, an error is displayed below the drop-down, and the Sign In button is replaced with the option to Retry the process.

If your Workbench administrator has enabled managed Databricks credentials, providing your own PATs or host environment variables in a .env, .databrickscfg, .Renviron or other files may interfere with Workbench-managed credentials and lead to inconsistent behavior. If you want to opt out of managed credentials, select None from the drop-down menu when starting sessions.

Databricks CLI

The Databricks CLI wraps the Databricks REST API, and provides access to Databricks account and workspace resources and data. For more information, see the Databricks REST API reference or the Databricks CLI documentation. Additional credentials are not needed when using the CLI with the Databricks auth integration inside Posit Workbench.

# List cluster metadata

databricks clusters get 1234-567890-a12bcde3Databricks with R

Workbench-managed Databricks credentials have been validated to work with sparklyr, ODBC with the Posit Pro Driver for Databricks, and the Databricks R SDK. For more information about using the Databricks pane to easily manage clusters or sparklyr connections, or about the Connections pane in RStudio Pro, see RStudio Pro: Databricks.

sparklyr version 1.8.4 or higher works with Workbench-managed credentials and modern Databricks Runtimes that make use of Databricks Connect (ML 13+). This is accomplished via reticulate and an additional pysparklyr package that powers sparklyr. For more information and installation instructions see the sparklyr package documentation.

Example code to use sparklyr is included below. When used with Workbench-managed credentials, the usual DATABRICKS_TOKEN and DATABRICKS_HOST environment variables are omitted.

library(sparklyr)

sc <- spark_connect(

cluster_id = "[Cluster ID]",

method = "databricks_connect",

dbr_version = "14.0"

)While some database operations can also be performed with sparklyr, similar workflows can be used for ODBC connections, whether for raw SQL code via DBI or with dbplyr:

library(DBI)

library(odbc)

library(dplyr, warn.conflicts = FALSE)

library(dbplyr, warn.conflicts = FALSE)

## AWS ----

conn_aws <- dbConnect(

odbc::odbc(),

dsn="Databricks AWS",

PWD = Sys.getenv("DATABRICKS_TOKEN_AWS")

)

## Azure ----

conn_azure <- dbConnect(

odbc::odbc(),

dsn="Databricks Azure",

PWD = Sys.getenv("DATABRICKS_TOKEN_AZURE")

)

tbl(conn_aws, in_catalog("samples","nyctaxi","trips")) %>% count()

tbl(conn_azure, in_catalog("samples","nyctaxi","trips")) %>% count()The R Databricks SDK can be used for general Databricks operations:

library(databricks)

client <- DatabricksClient()

# List clusters

clustersList(client)[, "cluster_name"]Databricks with Python

Workbench-managed credentials are expected to work with Python tools that adhere to the Databricks client unified authentication standard. The following have been tested in Posit Workbench: pyodbc, Databricks VS Code extension, Databricks CLI, Databricks SDK for Python, and pyspark via Databricks Connect.

pyodbc has been validated with Posit’s Databricks Pro Driver in VS Code.

import configparser

import os

import pyodbc

import re

# open the DATABRICKS_CONFIG_FILE to get oauth token information

config = configparser.ConfigParser()

config.read(os.environ["DATABRICKS_CONFIG_FILE"])

# Replace <table-name> with the name of the database table to query.

table_name = "samples.nyctaxi.trips"

databricks_host = os.environ["DATABRICKS_HOST"]

databricks_workspace_id = "138962681435081"

databricks_cluster_id = "1108-152427-dq9mgl"

databricks_http_path = (

f"sql/protocolv1/o/{databricks_workspace_id}/{databricks_cluster_id}"

)

databricks_oauth_token = config["workbench"]["token"]

# Setup the connection string for the Databricks instance

connection_string = f"""

Driver = /opt/simba/spark/lib/64/libsparkodbc_sb64.so;

Host = {databricks_host};

HTTPPath = {databricks_http_path};

Port = 443;

Protocol = https;

UID = token;

SparkServerType = 3;

Schema = default;

ThriftTransport = 2;

SSL = 1;

AuthMech = 11;

Auth_Flow = 0;

Auth_AccessToken = {databricks_oauth_token};

"""

# remove spaces from connection string

connection_string = re.sub(r"\s+", "", connection_string)

# connect to the Databricks instance

conn = pyodbc.connect(connection_string, autocommit=True)PySpark is a Python API for working with Apache Spark and can be used with Databricks managed Spark environments. It can be used for SQL or dataframe operations as well as machine learning algorithms via MLlib.

from pyspark.sql import SparkSession

spark: SparkSession = spark

print("Hello from Databricks")

spark.sql("select * from samples.nyctaxi.trips").show(3)The .databrickscfg file that Posit Workbench manages for you has a profile called “workbench” containing the short-lived tokens and other metadata for connecting.

from databricks.connect import DatabricksSession

from databricks.sdk.core import Config

# Optional to specify the profile

config = Config(profile="workbench", cluster_id="1234-abcdef-5678hijk")

spark = DatabricksSession.builder.sdkConfig(config).getOrCreate()

# PySpark code executed on Databricks Cluster

df = spark.read.table("samples.nyctaxi.trips")

df = df.filter(df.trip_distance > 0.5)

df_pd = df.limit(3).toPandas()

# Python code executed on local machine

print(df_pd)Python SDK

This brief example prints the available clusters based on your user profile, using the Databricks profile (profile="workbench") that Workbench-managed credentials provides.

from databricks.sdk import WorkspaceClient

# Connect to the Databricks instance

# Optional to specify the profile

w = WorkspaceClient(profile="workbench")

# Retrieve the list of clusters

clusters = w.clusters.list()

# Print the names of the clusters

for c in clusters:

print(c.cluster_name)